CogniSwitch: An Ontology-Governed Approach to Enterprise AI

Digital twin of enterprise knowledge. That's our north star at CogniSwitch. I'll be honest: "digital twin" is a loaded term. Thanks to AI-washing, its meaning has been diluted. That aside, as we engaged with customers across industries (manufacturing, retail, banking), we kept hitting the same objection: "Seems like the team wants to build it."

A custom GPT. A simple RAG solution. A few weeks, maybe days.

They'd come back three to six months later. The problem wasn't solved. But they still weren't quite ready to move. We saw this pattern repeat.

Build internally, hit the wall, return to the conversation and compare notes, but not ready to change course.

For most, the pain of AI's inconsistency was tolerable. Or they believed the next attempt would work. Or there was no forcing function.

Then we started engaging with customers in healthcare and financial services. The conversations had a different tone and intent. Almost everyone had already tried their own version internally. They understood that demos are cool. They also knew cool demos don't become production systems. These teams weren't asking "Can AI do this?" They were asking "Can this be done reliably in our context?"

The problem wasn't unique to healthcare or finance. The pressure was.

In most industries, inconsistent AI is frustrating. In regulated industries, it's disqualifying. The compliance officer has veto power. The auditor needs a trail. "We'll improve it over time" isn't an acceptable answer when the regulator asks "why did the system say that?" on day one.

Reliability wasn't a journey for them. It was the starting point.

What This Requires

Healthcare systems need AI that follows clinical protocols. Financial institutions need AI that applies regulatory rules. Pharma companies need AI that adheres to compliance requirements.

These aren't chatbot problems. They're not "find relevant information" problems. They're "prove the system followed the rules" problems. When a compliance officer asks why the AI recommended a specific action, acceptable answers look like:

Acceptable Answers

- Rule 4.2.1 applied because conditions X, Y, and Z were met.

- Section 3.4 takes precedence over Section 2.1 in this case per the conflict resolution hierarchy.

- Recommendation traces to CDC Guidelines 2024, mapped to ABx Stewardship protocol.

Unacceptable Answers

- The model determined this was the best response based on the context.

- The retrieved passages supported this conclusion.

- The reasoning chain the LLM generated shows its thinking.

The first set can be audited, reproduced, and defended. The second cannot. We built for organizations where the second set gets you fired - or fined. Solving this problem imposes architectural constraints:

Core Architectural Constraints

• Knowledge can't live in model weights. You can't inspect it, version it, or update it when regulations change.

• Retrieval can't be the endpoint. Finding relevant information isn't the same as applying it correctly.

• The LLM can't be asked to decide what's true. Generated reasoning isn't auditable reasoning.

• Every conclusion needs a traceable path. Not "here's what we retrieved" but "here's the logical chain from query to conclusion."

Most architectures fail at least one of these requirements. We built an architecture that meets all four.
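To make the fourth constraint concrete, here is a minimal Python sketch, assuming a hypothetical `Conclusion` record (our illustration, not CogniSwitch's API). It cannot be constructed without triggering conditions and an evidence chain, and it renders itself in the auditable form of the acceptable answers above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conclusion:
    """Hypothetical record pairing a conclusion with the chain that produced it."""

    verdict: str
    rule_id: str                     # the rule that fired, e.g. "4.2.1"
    conditions_met: tuple[str, ...]  # the conditions that triggered the rule
    evidence_chain: tuple[str, ...]  # rule first, ultimate source last

    def __post_init__(self):
        # A conclusion with no traceable path cannot be constructed at all.
        if not self.conditions_met or not self.evidence_chain:
            raise ValueError("conclusion lacks a traceable path")

    def explain(self) -> str:
        # Renders the auditable form: rule, triggering conditions, provenance.
        conditions = ", ".join(self.conditions_met)
        chain = " <- ".join(self.evidence_chain)
        return (f"{self.verdict}. Rule {self.rule_id} applied because "
                f"{conditions} were met. Evidence: {chain}")


c = Conclusion("Antibiotics not indicated", "4.2.1", ("X", "Y", "Z"),
               ("Rule 4.2.1", "Stewardship Policy § 4"))
print(c.explain())
```

The design choice is that traceability is enforced at construction time rather than checked after the fact: an untraceable conclusion never exists in the system.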

How We Built It

Concept-Level Embeddings, Not Chunk-Level

Standard vector RAG embeds chunks of text. A 500-token passage becomes a vector, and retrieval finds similar passages. We embed concepts. The difference isn't just what gets embedded; it's what similarity means and what you get back.

What Gets Embedded

Chunks are arbitrary slices of documents. Concepts are intentionally defined entities ("Diabetes management protocol," "Metformin contraindications," "HbA1c monitoring frequency") that exist in the ontology before any embedding happens. We're not discovering concepts through embedding. We're making formally defined entities retrievable.

What Similarity Means

When chunks are similar, it means their text patterns are similar. When concepts are similar, it means formally defined domain entities are semantically related. A query about "blood sugar monitoring" finds relevant concepts even if the source documents never used that exact phrase, because the ontology defines the semantic relationship, not the surface text.

What You Get Back

Chunk retrieval gives you text passages. Concept retrieval gives you nodes in a knowledge graph, with relationships, properties, and a defined place in a formal structure.
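A minimal sketch of concept-level retrieval, assuming a hypothetical `Concept` schema with ontology-declared synonyms and illustrative `EX:` identifiers. Word-overlap cosine stands in for a real embedding model, so the sketch only shows the structure: vectors are built from each concept's label and declared synonyms, and retrieval returns the concept node rather than a text passage.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Concept:
    """A formally defined ontology entity (hypothetical schema)."""

    concept_id: str
    label: str
    synonyms: list[str]  # alternate terms declared by the ontology, not mined from documents
    relations: dict[str, list[str]] = field(default_factory=dict)


def embed(text: str) -> Counter:
    # Toy stand-in for a real embedding model: lowercase bag-of-words counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(concepts: list[Concept]) -> list[tuple[Counter, Concept]]:
    # One vector per surface form; every vector points back to its concept node.
    return [(embed(form), c) for c in concepts for form in [c.label, *c.synonyms]]


def retrieve(query: str, index: list[tuple[Counter, Concept]], k: int = 1) -> list[Concept]:
    q = embed(query)
    best = {}  # concept_id -> (best score, concept)
    for vec, concept in index:
        score = cosine(q, vec)
        # Keep each concept's best-matching surface form; zero-similarity concepts are dropped.
        if score > best.get(concept.concept_id, (0.0, None))[0]:
            best[concept.concept_id] = (score, concept)
    ranked = sorted(best.values(), key=lambda pair: pair[0], reverse=True)
    return [concept for _, concept in ranked[:k]]


concepts = [
    Concept("EX:glycemic-monitoring", "Glycemic monitoring",
            ["blood sugar monitoring", "blood glucose monitoring"],
            {"narrower": ["EX:hba1c-monitoring-frequency"]}),
    Concept("EX:metformin-contraindications", "Metformin contraindications",
            ["metformin contraindication"], {"about": ["EX:metformin"]}),
]
hits = retrieve("how often is blood sugar monitoring needed", build_index(concepts))
```

What comes back is a node with its relations, not a passage: in this sketch `hits[0].relations["narrower"]` already points at the HbA1c monitoring concept, ready for graph traversal.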

Ontology-Guided Extraction

We don't let the LLM decide what concepts exist. But we're not just filtering what it extracts, either.

The ontology enriches extraction with formal meaning. When the LLM extracts "Metformin helps with diabetes" from a clinical document, that's a text triple. Useful, but semantically thin. Our ontology layer grounds it:

- "Metformin" links to its RxNorm concept: now the system knows it's a biguanide antihyperglycemic, with all the properties and relationships that come with it.

- "Diabetes" links to its SNOMED-CT concept: now the system knows Type 2 DM is a subtype, with its own clinical criteria and treatment protocols.

- The relationship isn't just a string label anymore. It's a connection between formally defined clinical entities with inherited properties, hierarchies, and constraints.
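The grounding step can be sketched as follows, assuming a hand-written lexicon, an is-a table, and a single domain/range constraint. All identifiers are hypothetical `EX:` placeholders standing in for real RxNorm and SNOMED-CT codes, and `ground` is an illustrative function, not a platform API.

```python
from dataclasses import dataclass

# Placeholder IDs (hypothetical). A real system would link to RxNorm and SNOMED-CT codes.
LEXICON = {"metformin": "EX:metformin", "diabetes": "EX:diabetes-mellitus"}
RELATION_MAP = {"helps with": "treats"}
IS_A = {
    "EX:metformin": "EX:biguanide",
    "EX:biguanide": "EX:antihyperglycemic",
    "EX:type-2-dm": "EX:diabetes-mellitus",
}
# Domain/range constraint: which typed pairs a predicate may connect.
CONSTRAINTS = {("EX:antihyperglycemic", "treats", "EX:diabetes-mellitus")}


@dataclass(frozen=True)
class GroundedTriple:
    subject: str
    predicate: str
    obj: str


def ancestors(concept_id: str) -> list[str]:
    # Walk the is-a hierarchy upward; anything defined on an ancestor is inherited.
    chain = [concept_id]
    while chain[-1] in IS_A:
        chain.append(IS_A[chain[-1]])
    return chain


def ground(subject: str, relation: str, obj: str):
    s = LEXICON.get(subject.lower())
    p = RELATION_MAP.get(relation.lower())
    o = LEXICON.get(obj.lower())
    if s is None or p is None or o is None:
        return None  # unmapped text never becomes a graph edge
    # Accept the edge only if some inherited type pair satisfies the constraint.
    if any((sa, p, oa) in CONSTRAINTS for sa in ancestors(s) for oa in ancestors(o)):
        return GroundedTriple(s, p, o)
    return None
```

In this sketch `ground("Metformin", "helps with", "diabetes")` yields an edge between formal IDs, while the reversed triple returns `None`: diabetes is not an antihyperglycemic, so it fails the inherited constraint.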

Validation

The resulting knowledge graph surfaces to domain experts for review. Nothing enters the queryable knowledge base without expert validation. This is how we handle the reality that extraction involves interpretation: we don't pretend it's perfect; we ensure it's checked.
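A minimal sketch of that review gate, assuming facts are hashable tuples and a hypothetical `ReviewQueue` class: extracted facts are staged as pending, and query-time reasoning only ever sees what an expert approved.

```python
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


class ReviewQueue:
    """Hypothetical review gate between extraction and the queryable knowledge base."""

    def __init__(self):
        self._items = {}  # fact -> (Status, reviewer or None)

    def stage(self, fact):
        # New extractions start as pending; re-staging never resets a decision.
        self._items.setdefault(fact, (Status.PENDING, None))

    def review(self, fact, approve, reviewer):
        if fact not in self._items:
            raise KeyError(f"unknown fact: {fact!r}")
        status = Status.APPROVED if approve else Status.REJECTED
        self._items[fact] = (status, reviewer)  # reviewer recorded for the audit trail

    def queryable(self):
        # Only expert-approved facts are visible to query-time reasoning.
        return [fact for fact, (status, _) in self._items.items() if status is Status.APPROVED]
```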

Semantic flexibility + structural precision

Ontology Governance at Reasoning Time

Retrieved concepts aren't handed to an LLM for synthesis. The ontology governs what the retrieved information means and what conclusions are valid.

If retrieved concepts conflict, the ontology defines precedence rules. If a query requires inference, the ontology defines valid inference paths. The reasoning is executed, not generated.
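Precedence resolution can be sketched as a deterministic function over a declared hierarchy. `Finding`, `PRECEDENCE`, and `resolve` are hypothetical names; the single ordering rule mirrors the "Section 3.4 takes precedence over Section 2.1" example above, and a conflict the hierarchy cannot settle raises an error rather than being guessed at.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    question: str  # what the finding answers
    answer: str
    source: str    # section the finding came from


# Hypothetical conflict-resolution hierarchy: earlier entries take precedence.
PRECEDENCE = ["Section 3.4", "Section 2.1"]


def resolve(findings: list[Finding]) -> dict[str, Finding]:
    rank = {source: i for i, source in enumerate(PRECEDENCE)}
    for f in findings:
        if f.source not in rank:
            raise ValueError(f"no precedence defined for {f.source!r}")
    chosen: dict[str, Finding] = {}
    # Visit findings from highest to lowest authority, so input order never matters.
    for f in sorted(findings, key=lambda f: rank[f.source]):
        current = chosen.get(f.question)
        if current is None:
            chosen[f.question] = f
        elif rank[f.source] == rank[current.source] and f.answer != current.answer:
            # Same authority, different answers: escalate instead of guessing.
            raise ValueError(f"unresolved conflict on {f.question!r}")
    return chosen
```

Sorting by authority before resolving is what makes the result independent of retrieval order: same findings, same winner.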

The LLM isn't asked to decide what's true.

By the time the LLM touches the reasoning step, the hard work is done. It receives:

- Structured knowledge (not raw documents)

- Formal relationships (not inferred connections)

- Ontology-governed conclusions (not "figure out what this means")

The LLM's job becomes translation: natural language in, natural language out. The reasoning happens in the structured layer where it can be audited, reproduced, and defended. This isn't about handicapping the LLM. It's about not burdening it with decisions it's bad at making reliably.
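The translation boundary can be sketched as two small functions, under stated assumptions: the names are hypothetical, the actual LLM call is omitted (any client would sit between them), and the substring check is a deliberately crude guard that the rephrased answer still cites the evidence chain.

```python
def build_translation_prompt(question: str, conclusion: str, evidence: list[str]) -> str:
    # The conclusion is fixed before the LLM sees it; the model only rephrases.
    return "\n".join([
        "Rephrase the conclusion below in plain language for the user.",
        "Do not add, remove, or change any conclusion or citation.",
        f"User question: {question}",
        f"Conclusion: {conclusion}",
        "Evidence chain: " + " <- ".join(evidence),
    ])


def translation_preserves_citations(llm_text: str, evidence: list[str]) -> bool:
    # Cheap post-check: every element of the evidence chain must survive rephrasing.
    return all(item in llm_text for item in evidence)
```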

A Reasoning Chain Example

Query: "Is antibiotic prescription appropriate for this upper respiratory infection case?"

1. Query parsed → Concepts identified:

[Antibiotic, Upper Respiratory Infection, Prescription Appropriateness]

2. Semantic search → Relevant protocol nodes retrieved:

- URI Clinical Guidelines v2.3

- Antibiotic Stewardship Policy

- Viral vs Bacterial Differentiation Criteria

3. Graph traversal → Connected concepts gathered:

- URI etiology patterns (85% viral)

- First-line treatment recommendations

- Antibiotic indication criteria

- Required documentation for antibiotic prescription

4. Ontology reasoning → Rules applied:

- URI default etiology = viral

- Viral URI → Antibiotics not indicated (Rule 4.2.1)

- Exception criteria checked: [fever >72hrs: No] [bacterial culture: Not performed] [immunocompromised: No]

- No exceptions apply

5. Conclusion: Antibiotic prescription not appropriate per protocol

6. Evidence chain: Rule 4.2.1 ← Stewardship Policy § 4 ← CDC Guidelines 2024

Every step traceable. Same query, same path, same result. The audit trail shows not just what was retrieved, but why it led to this conclusion.
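The ontology-reasoning step of this example can be sketched in Python. This is a hypothetical encoding of Rule 4.2.1 and its exception criteria, not CogniSwitch's rule engine; in particular, treating a positive culture as the bacterial-culture exception is our assumption. Because the function is pure, the same case always yields the same verdict, trace, and evidence chain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    diagnosis: str
    fever_over_72h: bool
    bacterial_culture: str  # "Not performed", "Positive", or "Negative" (assumed values)
    immunocompromised: bool


# Hypothetical encodings of the protocol content named in the example.
DEFAULT_ETIOLOGY = {"URI": "viral"}
EVIDENCE_4_2_1 = ("Rule 4.2.1", "Stewardship Policy § 4", "CDC Guidelines 2024")


def exceptions_met(case: Case) -> list[str]:
    # Assumption: a positive culture is what counts as the bacterial-culture exception.
    checks = {
        "fever >72hrs": case.fever_over_72h,
        "bacterial culture positive": case.bacterial_culture == "Positive",
        "immunocompromised": case.immunocompromised,
    }
    return [name for name, hit in checks.items() if hit]


def assess_antibiotics(case: Case) -> tuple[str, tuple[str, ...], tuple[str, ...]]:
    if case.diagnosis not in DEFAULT_ETIOLOGY:
        raise ValueError(f"no encoded rule for {case.diagnosis!r}")
    etiology = DEFAULT_ETIOLOGY[case.diagnosis]
    trace = [f"{case.diagnosis} default etiology = {etiology}"]
    hits = exceptions_met(case)
    trace.append("Exceptions met: " + (", ".join(hits) if hits else "none"))
    if etiology == "viral" and not hits:
        trace.append("Viral URI -> antibiotics not indicated (Rule 4.2.1)")
        return ("Antibiotic prescription not appropriate per protocol",
                tuple(trace), EVIDENCE_4_2_1)
    # This sketch encodes only Rule 4.2.1; what happens when an exception applies is not modeled.
    return ("Rule 4.2.1 does not apply", tuple(trace), ())


case = Case("URI", fever_over_72h=False, bacterial_culture="Not performed", immunocompromised=False)
verdict, trace, evidence = assess_antibiotics(case)
print(" <- ".join(evidence))  # Rule 4.2.1 <- Stewardship Policy § 4 <- CDC Guidelines 2024
```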

Where We Land

"Strong on reliability, expressiveness, and flexibility; medium on instant deployment."

Why Regulated Industries

We didn't build ontologies from scratch. We didn't have to. Healthcare has SNOMED-CT, ICD-10, LOINC, and RxNorm. Financial services has FIBO. Pharma has MedDRA and WHODrug. These are production-grade ontologies maintained by standards bodies, developed over decades, and used in regulatory submissions worldwide.

The industries that need deterministic AI are the same industries that invested in formal knowledge infrastructure. We leverage what exists. This changes the setup equation. We're not asking customers to spend months building ontologies. We're helping them configure and extend established standards for their specific operational context.

The Honest Tradeoffs

The investment is in understanding, not infrastructure.

The platform itself is agile. Knowledge is ingested in real time. Runtime configurations can be updated, modified, or deleted without rebuilding. New documents flow through established extraction pipelines. The system adapts. What takes time is understanding: deeply understanding the use case, mapping the workflow, and identifying which ontologies apply and how they map to your specific operational context. This is the work - and it's work we do together with domain experts, not work we hide behind a deployment timeline.

You can test the platform in a weekend. You can ingest documents, see concepts extracted, run queries, examine reasoning traces. That gives you a feel for what you're looking at.

Production deployment for regulated use cases takes longer—not because the system is slow, but because rigor requires understanding. We'd rather spend weeks getting the ontology mapping right than months debugging why the system gave inconsistent answers in production.

Where We're Not the Right Fit

Ontology selection is real work. Choosing the right ontologies, mapping them to your requirements, validating coverage - this takes weeks, not days. We don't skip this step because it's what makes everything else reliable.

Not suited for domains without established ontologies. If your industry hasn't formalized its knowledge structures, building them from scratch is expensive. We're not the right fit for a domain still defining its vocabulary.

Not suited for exploratory or creative use cases. If you want a system that imagines, riffs, or generates novel ideas, our architecture will feel restrictive. We optimize for correctness, not creativity.

The Diagnostic Questions

On Stakes

- What's the cost of an inconsistent answer? Frustrated user, or regulatory violation?

- Do you need to prove why the system said what it said—to auditors, to legal, to regulators?

- Is "the LLM reasoned through it" an acceptable answer for your compliance team?

On Domain

- Does your industry have established ontologies (healthcare, finance, pharma, life sciences)?

- Are your queries rule-based ("does this meet criteria X?") or exploratory ("what should I think about?")?

- How often does your domain knowledge change—quarterly, annually, rarely?

On Investment

- Do you have access to domain experts who can validate ontology mappings?

- Is your timeline measured in weeks or days?

- Are you solving a problem once (worth the investment) or experimenting broadly (not worth it yet)?

If your answers point toward high stakes, established ontologies, and rule-based queries - this architecture fits. If your answers point toward low stakes, undefined domains, and exploratory queries - a well-implemented LLM-interpreted architecture is probably the right choice. We'd rather help you make the right decision than sell you the wrong solution.

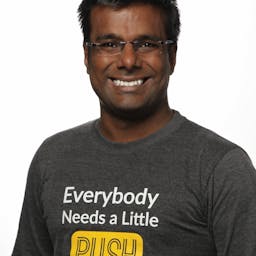

Vivek Khandelwal

2X founder who has built multiple companies over the last 15 years. He bootstrapped iZooto to multi-millions in revenue. He graduated from IIT Bombay and has deep experience in product marketing and GTM strategy. He mentors early-stage startups at Upekkha and in SaaSBoomi's SGx program. At CogniSwitch, he leads all things marketing, business development, and partnerships.